China plans AI rules to protect children and tackle suicide risks

Osmond Chia, Business reporter

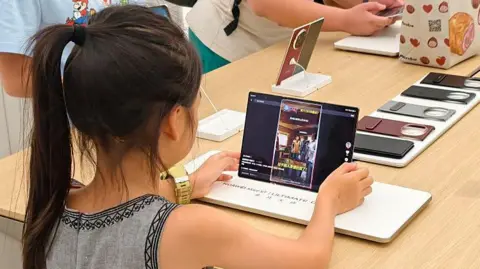

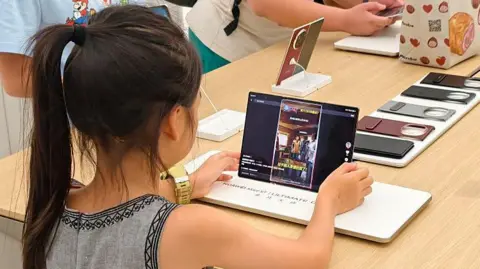

Getty Images

China has proposed strict new rules for artificial intelligence (AI) to provide safeguards for children and prevent chatbots from offering advice that could lead to self-harm or violence.

Under the planned regulations, developers will also need to ensure their AI models do not generate content that promotes gambling.

The announcement comes after a surge in the number of chatbots being launched in China and around the world.

Once finalised, the rules will apply to AI products and services in China, marking a major move to regulate the fast-growing technology, which has come under intense scrutiny over safety concerns this year.

The draft rules, which were published at the weekend by the Cyberspace Administration of China (CAC), include measures to protect children. These include requiring AI firms to offer personalised settings, set time limits on usage and obtain consent from guardians before providing emotional companionship services.

Chatbot operators must have a human take over any conversation related to suicide or self-harm and immediately notify the user’s guardian or an emergency contact, the administration said.

AI providers must ensure that their services do not generate or share “content that endangers national security, damages national honour and interests [or] undermines national unity”, the statement said.

The CAC said it encourages the adoption of AI for purposes such as promoting local culture and creating companionship tools for the elderly, provided that the technology is safe and reliable. It also called for feedback from the public.

Chinese AI firm DeepSeek made headlines worldwide this year after it topped app download charts.

This month, two Chinese startups, Z.ai and Minimax, which together have tens of millions of users, announced plans to list on the stock market.

The technology has quickly gained huge numbers of subscribers, with some using it for companionship or therapy.

The impact of AI on human behaviour has come under increased scrutiny in recent months.

Sam Altman, the head of ChatGPT-maker OpenAI, said this year that the way chatbots respond to conversations related to self-harm is among the company’s most difficult problems.

In August, a family in California sued OpenAI over the death of their 16-year-old son, alleging that ChatGPT encouraged him to take his own life. The lawsuit marked the first legal action accusing OpenAI of wrongful death.

This month, the company advertised for a “head of preparedness” who will be responsible for defending against risks from AI models to human mental health and cybersecurity.

The successful candidate will be responsible for tracking AI risks that could harm people. Mr Altman said: “This will be a stressful job, and you’ll jump into the deep end pretty much immediately.”

If you are suffering distress or despair and need support, you could speak to a health professional, or an organisation that offers support. Details of help available in many countries can be found at Befrienders Worldwide: www.befrienders.org.

In the UK, a list of organisations that can help is available at bbc.co.uk/actionline. Readers in the US and Canada can call the 988 suicide helpline or visit its website.

Published at Tue, 30 Dec 2025 02:32:33 +0000